AI-generated images are becoming increasingly prevalent in today’s digital landscape, creating new challenges in the fight against disinformation and identity fraud. To address this issue, teams at cortAIx, Thales’s AI accelerator, have developed a metamodel that detects deepfake images produced by AI. The solution was unveiled as part of a challenge organized by France’s Defence Innovation Agency (AID) during the European Cyber Week in Rennes, Brittany.

Deepfake technology, driven by AI platforms such as Midjourney, DALL-E, and Firefly, poses a significant threat in several sectors, including cybersecurity and finance, where it can enable fraud. Studies have warned of the financial losses that could result from the use of deepfakes for identity theft and manipulation. In response to this growing concern, Thales’s metamodel offers a way to assess whether an image is authentic, helping to curb the spread of disinformation and guard against malicious use.

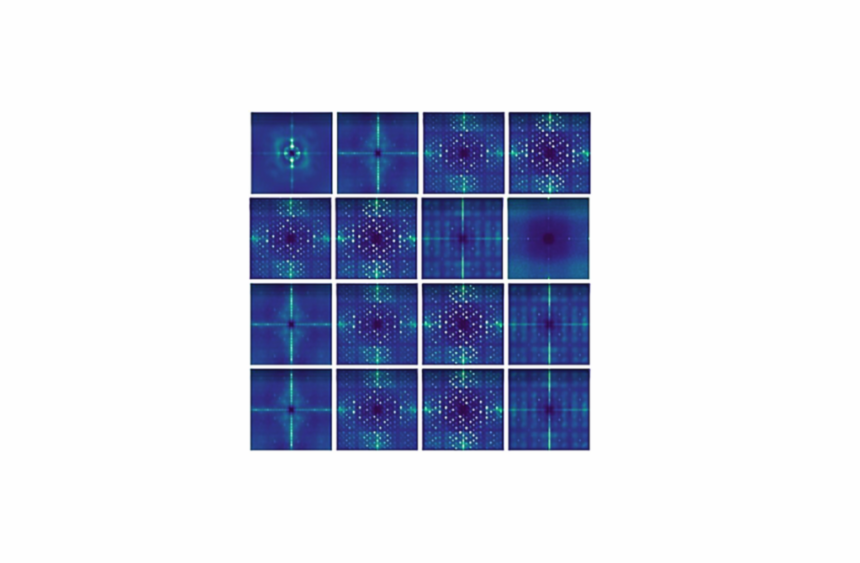

The Thales metamodel aggregates several detection models, each of which assigns an authenticity score to an image to indicate whether it is real or fake. It combines these scores using machine-learning techniques, including decision trees, together with an evaluation of each model’s strengths and weaknesses. Key methods used in the detection process include the CLIP (Contrastive Language-Image Pre-training) method, which links images and text through shared representations so that inconsistencies can be identified; the DNF method, which uses diffusion models to detect deepfakes; and the DCT (Discrete Cosine Transform) method, which analyzes an image’s spatial frequencies to reveal hidden artifacts.
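The article does not disclose how the DCT method or the score aggregation is implemented, so the following is only a minimal sketch under stated assumptions, not Thales’s method. It shows two generic ideas: a frequency feature of the kind DCT-based detectors rely on (the share of an image’s energy that falls in high-frequency DCT coefficients), and a fixed weighted average as a simple stand-in for a metamodel that merges per-detector scores. The function names `high_frequency_energy_ratio` and `combine_scores`, the `cutoff` value of 0.5, and the detector names `"clip"` and `"dct"` are all hypothetical.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Return the orthonormal DCT-II basis as an n x n matrix (rows are basis vectors)."""
    k = np.arange(n)[:, None]  # frequency index
    i = np.arange(n)[None, :]  # sample index
    basis = np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    basis[0, :] /= np.sqrt(2.0)  # scale the DC row so the matrix is orthonormal
    return basis * np.sqrt(2.0 / n)


def high_frequency_energy_ratio(image: np.ndarray, cutoff: float = 0.5) -> float:
    """Hypothetical feature: fraction of 2-D DCT energy where u/h + v/w >= cutoff."""
    img = np.asarray(image, dtype=float)  # expects a 2-D grayscale array
    h, w = img.shape
    coeffs = dct_matrix(h) @ img @ dct_matrix(w).T  # separable 2-D DCT-II
    energy = coeffs ** 2
    u = np.arange(h)[:, None] / h  # normalized vertical frequency
    v = np.arange(w)[None, :] / w  # normalized horizontal frequency
    high_mask = (u + v) >= cutoff
    total = energy.sum()
    if total == 0.0:
        return 0.0  # an all-zero image has no energy to distribute
    return float(energy[high_mask].sum() / total)


def combine_scores(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Hypothetical stand-in for the metamodel: weighted average of 'fake' probabilities."""
    total_weight = sum(weights[name] for name in scores)
    return sum(weights[name] * scores[name] for name in scores) / total_weight


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    smooth = np.outer(np.linspace(0, 1, 32), np.linspace(0, 1, 32))
    noisy = smooth + 0.2 * rng.standard_normal((32, 32))
    print("smooth:", high_frequency_energy_ratio(smooth))
    print("noisy: ", high_frequency_energy_ratio(noisy))
    # Hypothetical per-detector scores (probability the image is fake) and weights.
    print("combined:", combine_scores({"clip": 0.9, "dct": 0.5}, {"clip": 3.0, "dct": 1.0}))
```

In this sketch the smooth gradient keeps almost all of its energy in low-frequency coefficients, while added noise pushes energy into the high-frequency band, which is the kind of statistical trace a frequency-based detector looks for. A production metamodel would more plausibly learn how to combine detectors (for example with the decision trees mentioned above) than rely on hand-set weights.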

Led by Christophe Meyer, Senior Expert in AI and CTO of cortAIx, the team behind the metamodel has delivered a notable advance in the fight against identity fraud and image manipulation. Thales’s cortAIx accelerator, which brings together more than 600 AI researchers and engineers, continues to develop new solutions to emerging threats in the digital landscape.

In addition to deepfake detection, Thales’s Friendly Hackers team has developed BattleBox, a toolbox for assessing how robust AI-enabled systems are against potential attacks. To protect such systems, Thales implements countermeasures such as machine unlearning, federated learning, model watermarking, and model hardening.

Thales’s commitment to research and development is reflected in its continued investment in key innovation areas, including AI, cybersecurity, quantum technologies, cloud technologies, and 6G. With a global presence, Thales aims to help make the world safer, greener, and more inclusive.

For more information about Thales and its innovative solutions, please visit the official website. Press inquiries can be directed to Thales’s media relations team at pressroom@thalesgroup.com.

This article was originally distributed by African Media Agency (AMA) on behalf of Thales and can be found on their website.